We compute the support region on the sphere, which is required to compute the orientation and the descriptor.

Using the calibration, we map the detected extremum to the sphere and define a vicinity whose size is proportional to sin(σ), where σ is the scale at which the point was detected. This vicinity is then projected onto the omnidirectional image, where the gradient magnitudes and orientations are computed. A weighted histogram of the gradient orientations is built, and its peak is selected as the orientation of the point.
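The mapping described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the calibration is assumed to follow the unified central projection model (intrinsic matrix `K`, mirror parameter `xi`), and "a vicinity proportional to sin(σ)" is read as a small circle on the sphere with angular radius `k * sin(sigma)`, where `k` is a hypothetical proportionality constant. The helper names `lift_to_sphere`, `project_to_image` and `sphere_vicinity` are illustrative only.

```python
import numpy as np


def lift_to_sphere(pixel, K, xi):
    """Map pixel (u, v) to a point on the unit sphere (assumed unified model)."""
    m = np.linalg.solve(K, np.array([pixel[0], pixel[1], 1.0]))
    mx, my = m[0] / m[2], m[1] / m[2]
    r2 = mx * mx + my * my
    # Scale factor that places (mx, my) back on the unit sphere
    factor = (xi + np.sqrt(1.0 + (1.0 - xi * xi) * r2)) / (r2 + 1.0)
    return np.array([factor * mx, factor * my, factor - xi])


def project_to_image(points, K, xi):
    """Project unit-sphere points (N x 3) to pixel coordinates (N x 2)."""
    points = np.atleast_2d(points)
    denom = points[:, 2] + xi
    m = np.vstack([points[:, 0] / denom, points[:, 1] / denom, np.ones(len(points))])
    pix = K @ m
    return (pix[:2] / pix[2]).T


def sphere_vicinity(p, sigma, k=1.0, n=64):
    """n points on the small circle of angular radius k*sin(sigma) around p.

    k is a hypothetical proportionality constant (assumption).
    """
    rho = k * np.sin(sigma)
    # Any vector not parallel to p yields an orthonormal tangent basis (u, w) at p
    helper = np.array([0.0, 0.0, 1.0]) if abs(p[2]) < 0.9 else np.array([1.0, 0.0, 0.0])
    u = np.cross(helper, p)
    u /= np.linalg.norm(u)
    w = np.cross(p, u)
    t = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)[:, None]
    return np.cos(rho) * p + np.sin(rho) * (np.cos(t) * u + np.sin(t) * w)


# Example: a keypoint at pixel (500, 300) detected at sigma = 0.05 (made-up values)
K = np.array([[300.0, 0.0, 320.0], [0.0, 300.0, 240.0], [0.0, 0.0, 1.0]])
xi = 0.8
p = lift_to_sphere((500.0, 300.0), K, xi)
ring = sphere_vicinity(p, sigma=0.05, k=3.0)
ring_px = project_to_image(ring, K, xi)  # contour of the support region in the image
```

Every point of `ring` lies at the same angular distance from `p` on the sphere, so the shape of `ring_px` in the image is determined entirely by the projection model.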

Support region on the sphere

We observe that, depending on its position on the sphere, the support region in the image varies from circular to elliptical.
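Under the same assumed unified model (square pixels with focal length f, mirror parameter ξ), this observation can be quantified. A point at polar angle θ from the optical axis projects to image radius r(θ) = f·sin θ / (cos θ + ξ). A small spherical circle of angular radius ρ is stretched radially by ρ·dr/dθ = ρ·f(1 + ξ cos θ)/(cos θ + ξ)² and tangentially by ρ·r/sin θ = ρ·f/(cos θ + ξ), so its axis ratio in the image is (1 + ξ cos θ)/(cos θ + ξ). Because (1 + ξ cos θ) − (cos θ + ξ) = (1 − cos θ)(1 − ξ) ≥ 0, the region is circular on the optical axis and becomes increasingly elongated in the radial direction away from it when ξ < 1. For ξ = 1 the model reduces to a stereographic projection, which maps circles to circles. The function name below is hypothetical.

```python
import numpy as np


def support_region_aspect_ratio(theta, xi):
    """Radial/tangential stretch of a small spherical circle after projection.

    theta: polar angle (rad) of the region centre from the optical axis.
    xi: mirror parameter of the unified model (assumed calibration model).
    1 means the projected region is circular; > 1 means it is elliptical,
    elongated along the radial direction of the image.
    """
    c = np.cos(theta)
    return (1.0 + xi * c) / (c + xi)


for deg in (0, 30, 60, 90):
    ratio = support_region_aspect_ratio(np.radians(deg), xi=0.8)
    print(f"theta = {deg:2d} deg -> axis ratio {ratio:.3f}")
```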

Support region for the descriptor

A similar process is followed to compute the descriptor of the detected feature. In this case the support region is divided into 36 bins. We also use this support region to verify the correctness of the previously computed orientation.

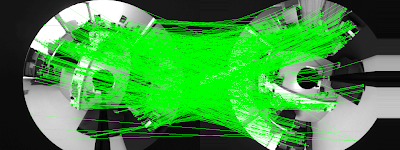

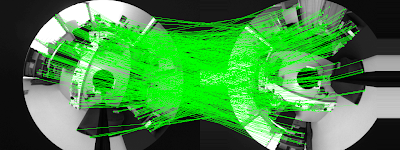

The images above show examples of support regions on two omnidirectional images, together with the previously computed orientation.

Figure panels: good orientation (Image 1, Image 2); bad orientation (Image 1, Image 2).

This incorrect orientation may be caused by computing the orientation from the gradients of the original image instead of the smoothed image in which the feature was detected.
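The alternative implied by this explanation, taking the gradients on the image smoothed at the detection scale, can be sketched as follows. This is a hedged illustration, not the method used here: it assumes NumPy only, a Gaussian blur with standard deviation `sigma`, central-difference gradients (`np.gradient`), histogram weights equal to the gradient magnitude, and 36 orientation bins (the bin count of standard SIFT, assumed here for the orientation step). For brevity the support region is a plain image-space disc rather than the projected spherical vicinity. The names `gaussian_smooth` and `orientation_on_smoothed` are hypothetical.

```python
import numpy as np


def gaussian_smooth(image, sigma):
    """Separable Gaussian blur with reflected borders (NumPy only)."""
    radius = max(1, int(np.ceil(3.0 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    padded = np.pad(image.astype(float), radius, mode="reflect")
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def orientation_on_smoothed(image, sigma, center, radius, n_bins=36):
    """Dominant gradient orientation (rad) around center=(row, col).

    Gradients are taken on the image smoothed at the detection scale sigma,
    not on the original image.
    """
    smoothed = gaussian_smooth(image, sigma)
    gy, gx = np.gradient(smoothed)  # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    angle = np.mod(np.arctan2(gy, gx), 2.0 * np.pi)
    rows, cols = np.ogrid[: image.shape[0], : image.shape[1]]
    # Circular image-space support region (simplification, see lead-in)
    mask = (rows - center[0]) ** 2 + (cols - center[1]) ** 2 <= radius ** 2
    hist, edges = np.histogram(angle[mask], bins=n_bins, range=(0.0, 2.0 * np.pi),
                               weights=magnitude[mask])
    peak = int(np.argmax(hist))
    return 0.5 * (edges[peak] + edges[peak + 1])  # centre of the peak bin


# Synthetic ramp whose intensity grows with row + col
image = np.add.outer(np.arange(64.0), np.arange(64.0))
theta = orientation_on_smoothed(image, sigma=1.6, center=(32, 32), radius=5)
# theta is the centre of the 40-50 degree bin, i.e. about pi/4
```

Returning the centre of the peak bin keeps the sketch short; a finer estimate could interpolate between neighbouring bins.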